I’ve enjoyed Accessibility Scotland since it started in 2016, and I was eagerly anticipating this year’s event. It was so popular that the conference sold out 5 weeks in advance.

Accessibility and Ethics was the theme for Accessibility Scotland 2019. It was a single-track conference with 5 speakers on the day. Unusually, there was a gender imbalance in favour of women (4 women, 1 man).

While I have summarised the day below, I recommend downloading the speakers’ slides, particularly for Léonie’s talk, which was highly interactive.

View or download Accessibility Scotland 2019’s slides.

If you’d like to read about the previous Accessibility Scotland events, here are my posts:

- Accessibility Scotland: a new event for web accessibility professionals

- Accessibility Scotland 2017 – All The Best In Inclusive Design

- Accessibility Scotland 2018 – How To Understand Users Better

Cat Macaulay – Accessible Public Services – Are We There Yet?

Cat works for the Scottish Government as their Chief Design Officer. Her talk centred on the design of the new Social Security Agency in Scotland, as the administration of 11 UK benefits has been devolved.

Cat has MS and has personal experience of the benefits system. She said that applying for DLA and PIP was:

The worst experience of my life – and I spent most of my 20s working in a war zone.

Cat described designing a service like this as “risky and challenging”. They are never going to please everyone, but she and her team wanted to get service users (people who have applied for benefits) involved early on. They built a panel with users and designers, over two thousand strong.

Service users helped to design a range of aspects of the new agency including:

- The legislation

- The Customer Charter

- How the agency talks to people

- What spaces are used for face-to-face interactions.

https://twitter.com/lauranels/status/1187658779426471936

The key was that everyone should be involved.

If one person is left behind in a democracy, that’s too many.

The trouble with public services

Often people access public services after life-changing events, when they are stressed and cognitively disabled. They soon find that those services haven’t been designed with users in mind, but around how the sector is structured.

A person may need the aid of several departments or organisations. All have different ways of operating. That means that the Scottish Government, local authorities and NHS all have to come together. Plus the individual needs to be sensitively guided through such a journey.

You can find out more about Cat’s work by following the #SAtSD hashtag on Twitter: it stands for Scottish Approach to Service Design.

Their philosophy is:

- We will work with people, not for them.

- We will share and reuse.

- We will use inclusive and accessible design methods so everyone can participate in design (if they want to).

https://twitter.com/DrHennaKarhapaa/status/1187666433989369861

Further reading

Scottish Approach to Service Design: Creating Conditions for Change

The Scottish Approach to Service Design (SAtSD) framework document

Léonie Watson – I, Human

Léonie has been involved in the accessibility community for years. The Inclusive Design 24 conference is her brainchild; she is also on the W3C Advisory Board and is director of TetraLogical. She blogs at Tink.

Léonie talked about human capabilities and how close AI has come to emulating them, with reference to Asimov’s 3 Laws of Robotics.

What sets us apart from other living beings? We can see, hear, speak and think.

Seeing

To AI, seeing means image recognition.

Léonie showed us 3 images of chairs: a standard wooden chair, a camping chair and one that resembled a spool of thread.

We humans can recognise that they are the same type of object, but machines would have a much harder time. Following simple AI rules wouldn’t always work because the materials and shapes used are so different.

Until 2006 and the advent of cloud computing, machines weren’t very good at image recognition.

Facebook’s image recognition (2016) describes Léonie’s portrait photo as “One person in a room close up.” Not bad, but it misses mentioning her distinctive violet hair!

More recently, Microsoft’s Seeing AI (2017) and Apple’s Face ID use image recognition to help with day-to-day activities.

Seeing AI describes objects in your environment when you hold your phone up to them. (It’s only available on iOS at the time of writing.)

Face ID (2018) recognises an Apple device’s owner’s face. It can be used to unlock a device and pay for products from Apple’s stores or ones accepting Apple Pay.

Hearing

Hearing AIs include speech-to-text apps, and applications that perform actions based on voice input.

Apple had a form of speech recognition for its 1993 Macintosh. A user could open programs, print documents and shut down the computer by voice.

Speech-to-text programs like Dragon NaturallySpeaking are now smart enough to add a comma or a full stop to a sentence without you having to dictate punctuation.

Most recently, the Kinect Sign Language translator project in 2013 managed to translate one sign language to another.

Speaking

Did you know that the first artificial speech machine dates back to 1769? I didn’t!

While the early systems produced purely synthetic speech, newer speech programs use blends of real human voices.

Nowadays we are familiar with voice assistants thanks to Siri and Alexa. They are useful for:

- setting timers

- calendar reminders

- toggling lights on and off

- and much more.

One downside is that the voices are fairly monotone and lacking in emphasis.

Léonie demonstrated a standard Alexa voice reading Stephen King’s “It”. Let’s just say that if you were expecting mood and suspense, it didn’t deliver.

For a better experience, you can alter the voice, speed and emphasis of the spoken word using a language called SSML (Speech Synthesis Markup Language).

It’s still not perfect but “Enrique” delivers a better reading than the Alexa default of this immortal line from “The Princess Bride”:

Hello, my name is Inigo Montoya. You killed my father. Prepare to die.
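
To give a flavour of what SSML looks like, here is a minimal Python sketch that wraps that line in SSML markup. It is my own illustration, not the markup Léonie used in her demo. The `build_ssml` helper, the slow speaking rate and the 600 ms pauses are assumptions I've picked for the example. The `speak`, `voice`, `prosody`, `emphasis` and `break` elements are standard SSML, though which voice names are available depends on the platform.

```python
from xml.etree import ElementTree as ET
from xml.sax.saxutils import escape


def build_ssml(sentences, voice="Enrique", rate="slow", pause_ms=600):
    """Hypothetical helper: wrap sentences in SSML with a named voice,
    a speaking rate, a pause between sentences and stress on the last one."""
    parts = []
    for i, sentence in enumerate(sentences):
        text = escape(sentence)  # escape &, < and > so the markup stays valid
        if i == len(sentences) - 1:
            # Put strong emphasis on the final sentence only.
            text = f'<emphasis level="strong">{text}</emphasis>'
        parts.append(text)
    # Insert a timed pause between consecutive sentences.
    pause = f'<break time="{pause_ms}ms"/>'
    body = pause.join(parts)
    return (
        f'<speak><voice name="{voice}">'
        f'<prosody rate="{rate}">{body}</prosody>'
        f'</voice></speak>'
    )


LINES = [
    "Hello, my name is Inigo Montoya.",
    "You killed my father.",
    "Prepare to die.",
]

ssml = build_ssml(LINES)
ET.fromstring(ssml)  # raises ParseError if the markup is not well-formed
print(ssml)
```

The resulting string is what you would hand to a speech service in place of plain text. Changing `rate`, `pause_ms` or the emphasised sentence is how you would experiment with mood and pacing.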

When AI doesn’t go right

Of course, AI is only as good as the humans who program it.

Some examples of AI gone wrong are:

In 2013, IBM’s Watson was fed the Urban Dictionary and learned to swear. Watson had to have the dictionary wiped from its memory bank to clean its potty mouth.

Amazon’s Rekognition mis-identified 28 members of the US Congress as criminals in 2018.

Worst of all was Hanson’s robot Sophia (2016). She was built as a robot companion that could see, hear and speak.

Sounds perfect until she let slip the following in a conversation with her creator:

Dr David Hanson: “Do you want to destroy humans? Please say no.”

Sophia: “OK. I will destroy humans.”

(Incidentally, despite Sophia violating Asimov’s First Law of Robotics, that hasn’t stopped the company from creating a Little Sophia.)

Ashley Peacock – Design to build bridges: when to use disability simulation

Ashley runs the digital agency Passio, which specialises in apps and technology for disabled people, with a focus on neurodiversity and cognitive impairment.

Disability simulation is a contentious topic. While a simulation may seem an attractive option to quickly acquire some knowledge of a disability, simulations have a number of drawbacks.

If you simulate a disability and feel pity or fear afterwards, that’s not a good experience.

Ashley spoke about three projects that she had worked on and the methods she used to understand and build for her users.

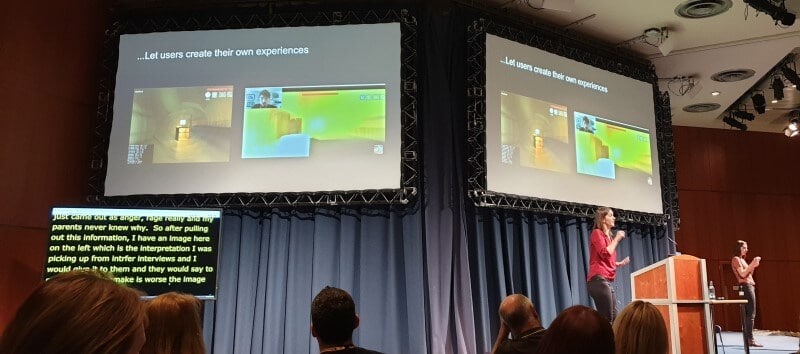

Autism simulation game

Ashley wanted to create an autism simulator to help herself and others better understand the condition.

Her research started with interviewing people on the autism spectrum. She soon realised that sometimes she got answers from people because they thought that’s what she wanted to hear.

So she adapted her methods to ask more open-ended questions, avoid leading her interviewees and have questions drafted by her study group. This led to much more useful data.

The final deliverable was a game. The game’s object was to complete some everyday tasks within a time limit while coping with sensory overload from the environment.

YouTube user Autistic Genius tried it. Here’s his test session:

He commented,

That was actually surprisingly quite accurate and I’m incredibly amazed by that person’s creativity. I’m so impressed. When you had the overload it actually almost felt like I was actually having one.

Neurotypical (non-autistic) users of the app developed strategies for playing the game that were similar to those of the autistic users.

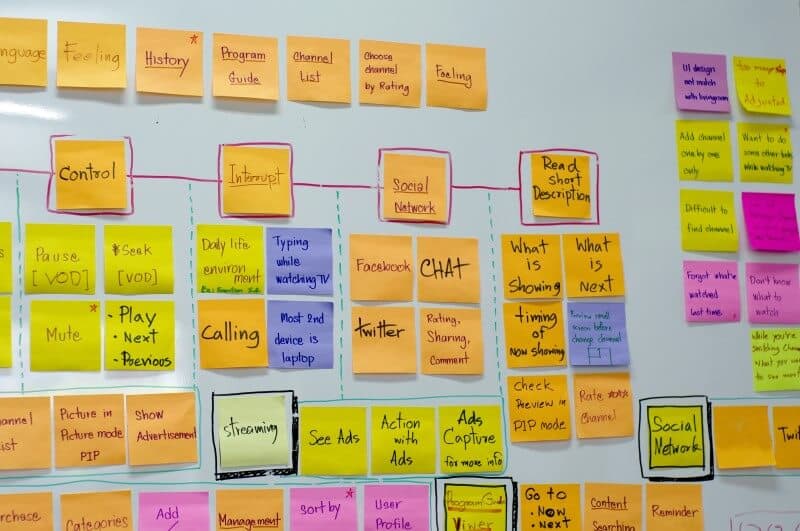

Public transport planning app

The aim was to design an app to help with planning and taking journeys by public transport. It was for a range of impairments.

The challenge with simulation was that the environment was unpredictable and changeable.

Ashley wondered if she could try out using a wheelchair for a day, but was advised against it. Instead, she chose to shadow wheelchair users.

This was a better choice because she realised she would have got tired propelling herself in a chair for a day. In addition, she would have lacked the arm strength for some manoeuvres. She could also witness stress and annoyance in her travelling companions as a neutral observer.

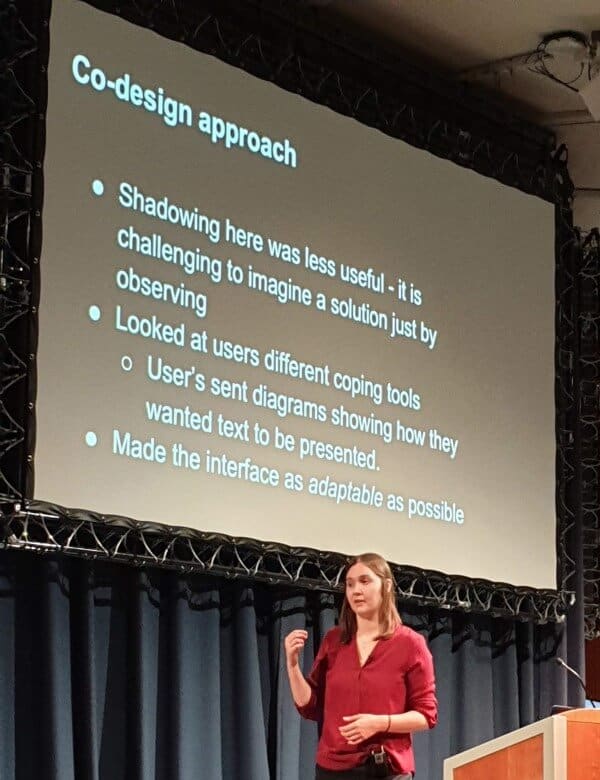

The Read Clear app

This project was to create a reading tool for people with PCA (posterior cortical atrophy), a rare form of dementia.

Initial research showed that people could read and comprehend two or three words at a time.

The problem? That was fine in theory, but people don’t read like that in practice!

Passio went back to the drawing board and with user involvement, made an app with lots of options, fine-tuning it as they did more user testing.

Lessons learned

- Your users are the experts: get them on board early on.

- Don’t make assumptions.

- Use co-design.

- Learn how to ask the right questions.

- Avoid a “one size fits all” approach.

Matt May – Design without empathy

Matt is the head of Inclusive Design at Adobe. He’s also a fourth generation Scottish American so felt quite at home in Edinburgh.

Why is empathy good?

In the NN Group’s Design Thinking 101, empathy is a key step in the design process.

“Empathize: Conduct research to develop an understanding of your users.”

In AI, an empathic system will ask deep questions. The result is that you feel that the computer feels for you.

Why is empathy bad?

Empathy can be a bad thing if it becomes an expectation or a demand: you should feel X about Y.

Or if you claim you are feeling empathy for someone but are not engaging with the person or people you are trying to understand.

https://twitter.com/marievandries/status/1169242121108369409

Compassion vs empathy

Matt drew a distinction between compassion and empathy.

Empathy = “I feel the things that you feel.”

Compassion = “I understand that you are in need.”

A Buddhist monk was asked to meditate on both, and scientists studied the effects on his brain.

Feeling compassion made him feel warm, positive and prosocial.

Feeling empathy made him feel exhausted and burned out.

Read about the neuroscience study on empathy and compassion.

Ego + empathy can lead to defensive behaviour:

https://twitter.com/mattmay/status/1162025183504322560

In other words:

Designer: “I’ve done the right thing. Why aren’t you grateful for it?”

User: “Because you didn’t ask me if I wanted it.”

Simulations and empathy

Going back to Ashley’s point about the pros and cons of disability simulation, Matt noted that RNIB in the UK support the use of sim specs (glasses which mimic different eye conditions). However, the National Federation of the Blind in the USA do not.

People who use the sim specs have been reported as saying, “I’m glad I don’t have to deal with that all the time.” This sounds more like pity than true understanding of the condition they had a taster of.

Also, a simulation will not allow the participant to experience qualities that someone with a visual impairment might have, such as a better eye for detail.

If it happens at all, simulation should be led by someone who has that experience.

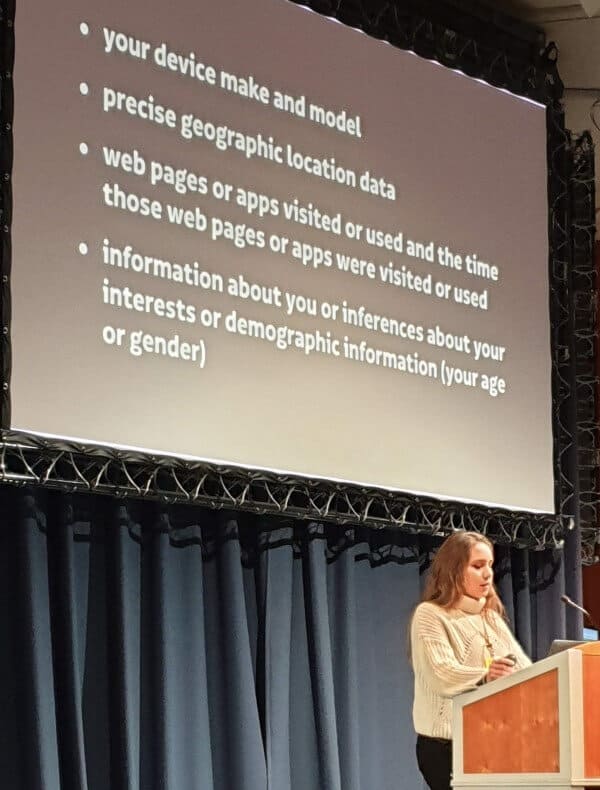

Laura Kalbag – Accessible Unethical Technology

Laura is the author of Accessibility for Everyone, co-founder of the Small Technology Foundation and co-creator of the web privacy tool Better for iOS.

Her talk focused heavily on privacy in the digital age and how that intersects with accessibility.

Who’s following you?

Our daily web and app usage is being tracked by many, many companies. These companies can accumulate and aggregate data on us which allows them to profile us and target ads.

Tracking can be in the form of cookies, browser fingerprinting or web beacons.

Trackers are getting more sophisticated and more harmful as time goes on.

For example, AppNexus tracking is on 16.9% of the top 10,000 sites.

Content blockers like Better have a blacklist of unethical trackers which they block.

https://twitter.com/deanholden/status/1187752011145646087

Protection from online tracking

Laura went through a list of things we could do to protect ourselves, including:

- Don’t log into sites (if that’s not possible, log in and then log out again as soon as you can)

- Use a reputable VPN

- Use private browsing

- Use custom email addresses

- Find alternative software that’s privacy conscious

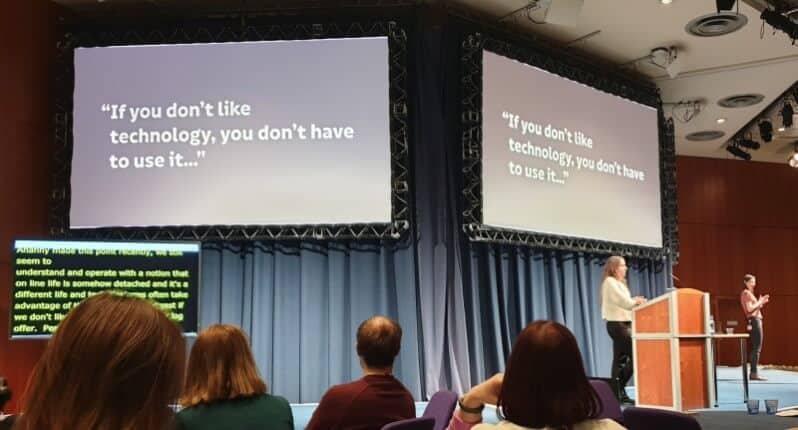

Difficulties with maintaining privacy

There are quite a few barriers that make keeping our online activities private a challenge.

Privacy policies are not written in clear language. A study of 500 privacy policies showed that nearly all needed more than 14 years of mainstream education to understand! It’s not real consent if you don’t understand the terms or aren’t given a true choice (agree or don’t use our site).

Privacy on mobile is worse than on desktop, as apps leak data. Some people only access the Web via a phone, so are automatically at a disadvantage.

Campaigns like #DeleteFacebook have gained traction in the wake of privacy scandals such as the Cambridge Analytica affair. But ditching Facebook is not reasonable for many disabled people who rely on it for social contact or networking and have no viable ethical alternative.

Data sharing and profiling may erode our privacy but they bring convenience, so people are less likely to argue against them.

Minority groups, such as people of colour, poor people or disabled people, are more likely to be affected by privacy issues.

Screen reader detection

Should screen readers be detected in analytics? WebAIM’s 2019 survey of screen reader users showed that 62.5% of respondents would be very or somewhat comfortable with the idea.

While collecting anonymised data on screen reader usage seems like a good idea if it leads to better coded, more accessible sites, there are several powerful arguments against it, summed up in this post by Heather Burns:

Detecting screen readers in analytics: Pros and cons

Léonie also has a post on screen reader detection and why she is against it. In her words:

My disability is personal to me, and I share that information at my discretion. Proponents of screen reader detection say it would be discretionary, but that’s like choosing between plague and pestilence. Choosing between privacy and accessibility is no choice at all.

Marco Zehe also argues against screen reader detection, citing a loss of anonymity and equality:

It would take away the one place where we as blind people can be relatively undetected without our white cane or guide dog screaming at everybody around us that we’re blind or visually impaired, and therefore giving others a chance to treat us like true equals.

What’s wrong with the way our tech is built?

In an article called Technology Colonialism, Anjuan Simmons writes:

These products are almost always designed by white men for a global audience with little understanding of the diverse interests of end users.

Companies like Facebook, Twitter, and Google are predominantly used by women and minorities whose data and usage patterns are sold for profit. Yet, these groups are often blocked from working at technology companies or encounter abuse if they do manage to gain employment.

https://twitter.com/jessscameron/status/1187762636915724292

What can people who work in the digital space do to make accessible and ethical tech?

- Adopt a Privacy by Design approach.

- Think ethically – put people above profit.

- Make products and services easy to use.

- Design inclusively.

- Involve your users in the design process.

- Make your product functional without gathering personal information.

- Avoid or minimise using third party services.

- Treat users’ personal data with the greatest care and respect.

- Don’t copy and paste your privacy policy – and make it transparent.

- Embrace the open source community: share your work and let others build upon it.

Overall thoughts on Accessibility Scotland 2019

Props to the organisers, who did a fantastic job in organising and sourcing a stellar line-up of speakers. Thanks also to the volunteers on the day who made the event run smoothly.

The Accessibility and Ethics theme provided some excellent talking points and perspectives. The flip side was that some of the speakers’ points overlapped.

Léonie’s presentation was my favourite of the day. It was educational, thought-provoking and fun!

As well as an accessible venue, captioning and BSL interpretation of the talks, there was a quiet room available on the day.

Lightning talks were also new this year in the lunch break (though I chose not to attend).

https://twitter.com/wojtekkutyla/status/1187703267335254017

I got to meet up with old friends…

And make new ones…

If I had any gripes, they would be about the catering. Tea and coffee were only available first thing and in the afternoon break. I wasn’t the only one who nipped out of the conference centre in search of caffeine at lunchtime! Also, the lunch food labels were on a board near the tables. By the time I got to choosing items I’d forgotten what the ingredients were. I would have also liked salad and fruit available.

So that’s a wrap on Accessibility Scotland 2019. Roll on 2020!
